AI Under the Hood: Culture Intelligence

Imagine a sophisticated chatbot that can quickly help you and your organization intuitively map your values. Now jump in as we test drive Culture Intelligence’s new Culture DNA Copilot.

In our first “AI Under the Hood” interview I sit down with Peter Brattested Lillevold, Technical Advisor and former CTO of Culture Intelligence to discuss their new Culture DNA and AI Copilot products. Peter shows how the Copilot works in real time and then shows us what’s going on “Under the Hood” of their LLM including an in-depth explanation of their RAG architecture for culture-specific language models.

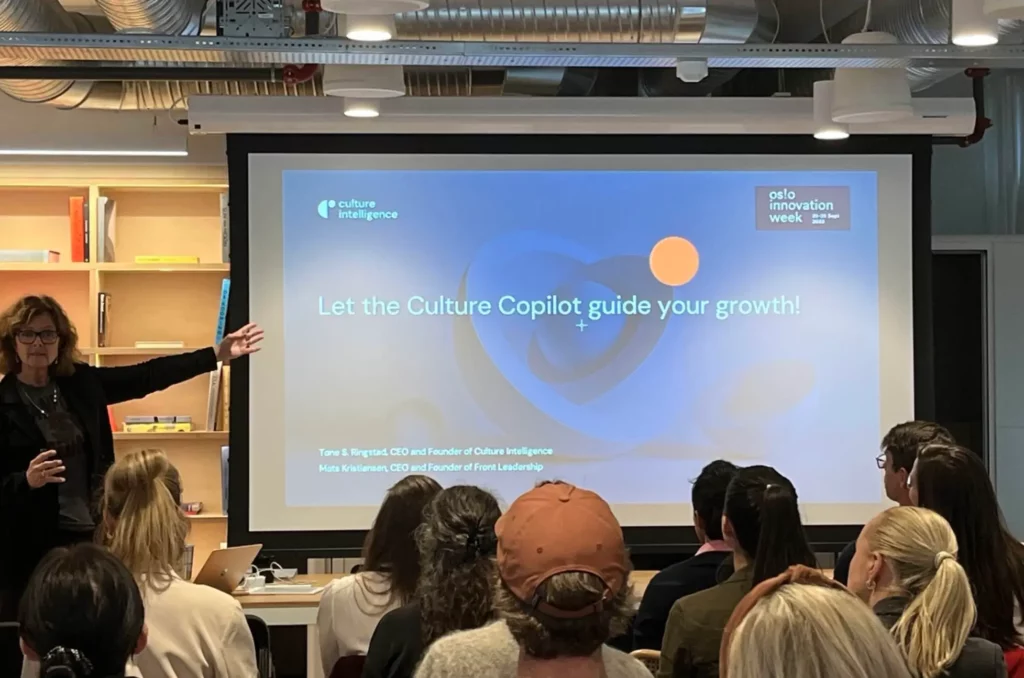

Thymn Chase: We met at Oslo Innovation in September 2023. Your Culture Intelligence session caught my eye because Black Label are data visualization specialists and we also have a very strong values-driven culture, so I thought there would be an immediate connection. I had come straight from an AI hackathon at Databutton where we were brainstorming how to build a product connecting AI and dataviz. So it was amazing to walk across town, drop into your Culture Intelligence session, and hear that you were launching an AI Copilot which helps organizations map their culture and delivers value-based, dataviz-rich insights. I’m really curious to hear how the Copilot has evolved and where you are now in your AI journey.

Peter Brattested Lillevold: Yeah, absolutely. When I began working on our Culture Intelligence products, it quickly became obvious to me that AI had a role in automating more of what consultants and advisors have traditionally done in projects with companies: talking about what they do, what their values are, and what survey data says their organization actually looks like. That’s really where the AI roadmap started to play out. How can we build at least some of that expertise into the application, so that users can ask their weird questions and get suggestions and advice from the Copilot? That’s really where it all started.

TC: I love that you guys encourage weird questions about values. There’s so much about company culture, the idiosyncrasies and the weird stuff, that makes people and companies unique.

PBL: This is really where it would be very difficult to build something based only on a traditional knowledge base with articles. You only get so far with that approach. But the explosion in LLM technology, and seeing how far ChatGPT has come in the last year, convinced us that it made sense to build a prototype and see how good this technology could be in our space.

TC: Cool

PBL: So, should we try to ask our Copilot some weird questions?

TC: Absolutely.

Watch Peter & Thymn Test Drive the AI Copilot

Read the Full Transcript of Peter & Thymn’s Copilot Test Drive Below:

PBL: What you see here is our Culture DNA application, and we are enabling the Copilot in that application now. At the outset it looks like a chat application where I can ask really anything. I have already asked some weird questions here.

TC: So this is built on top of ChatGPT?

PBL: We need to clarify some terms here. ChatGPT is a specific product provided by OpenAI, but under the hood we are using the same services. It’s built on the same architecture, but we have also extended a bit how we use those services. I’ll go through that in more detail afterwards.

TC: Okay, so what does the Copilot do? Is it supposed to help decide which types of roles and personas we need? What is it built to help with?

PBL: The first version is really aimed at answering business culture related questions. This is where you see a different experience from what you would get from ChatGPT or even Microsoft Copilot. Here we combine our expert knowledge with the general language models. We use the language model to shape responses, because that’s what it is really powerful at, but we combine that with a knowledge base that we provide in our system. This is knowledge that ChatGPT doesn’t have access to; it’s our IP. So this is how we plug those general services into our domain and make them an integral part of the application we’re delivering.

TC: Makes sense. So can you use this Copilot, if you haven’t already, to let’s say map the values of your organization?

PBL: Yeah, that’s where we started. It’s still a general chat experience within that domain, so our next phase for the Copilot is to connect it with the actual data. When we do that, we will introduce more traditional AI algorithms to do categorization and comparisons between one company and another, or perhaps between one team inside your organization and another, using machine learning to make those comparisons more relevant. Then we combine that with GPT and large language models to describe the traits you would see in your culture. That’s the next step we are taking for the Copilot.

We’re bringing in all the expertise that we have and making it available to all users. Primarily it will be useful for those in your organization working with culture projects or managing your workforce. Leadership too, definitely, because with culture we believe it’s crucial to onboard leadership and get them to use the tool. So it will be available to them as well as to all other users. Even if you’re just participating and want to learn more, you can jump in and ask as many questions as you like.

TC: Okay, very cool. So, I mean I could ask kind of a general question, like what do you have going on?

PBL: Yeah, I just tested how good it is at creating a development program. In this case it came up with a three-week development program for improving innovation in my team. If you have a weird question as well, we can try that…

TC: Yeah, I thought of a basic question. Oh wow, that was quick!

PBL: Yeah, so here it is. Fast performance is something we have been working on, and it’s still a challenge. The underlying technology is there, and the models and services keep improving; they are getting pretty fast. I would say they are very useful in chat experiences like this, where you need it to be relatively responsive. Here it came up with a three-week program, which is good, because in some earlier scenarios where I asked for three weeks or a specific number of items, it created more items than I asked for.

Here it suggests that we should be focusing on innovation. It starts with addressing basic needs, such as providing support for team members’ home situation and allowing flexibility. That sounds good. Support systems relevant to the team, possibly healthcare, should be taken care of; I think that’s probably more general advice. Once these foundational needs are met, you can introduce the need for innovation and encourage your team to work together in new ways. So, in the first week: address foundational needs and immediate concerns, provide support systems and resources, and foster a respectful and inclusive team.

Then week two, innovation mindset: here’s where you get into the mindset of innovation. Introduce the concept of innovation and its importance for the team, and encourage curiosity and a willingness to explore new ideas. I know these come from the values in the data that we provide. Under innovation, there are values called curiosity and exploration, so it’s shining through here that this is built on those values.

TC: Mm-hmm

PBL: Courage and risk-taking: letting your team members feel empowered to challenge the status quo. Then week three, collaboration and learning. These are values from our model that are useful in innovative teams and in the innovation mindset. Facilitate collaboration, encourage continuous learning and skill development, and foster a culture of inspiration and vision where team members understand the value of their work.

TC: Mm-hmm

PBL: So yeah, this is kind of one of the examples that we like to show off. If you want to get some advice, like what we should do for our team, here you can actually ask the Copilot to suggest a program for you.

TC: What’s interesting is that you focused on innovation as the key value to improve, is that right? I’m curious whether I can ask it something like: what are some values we need to introduce in our company to be more innovative? To come at it from the other direction. Would it help us with that?

PBL: Let’s see. What are some values that we need to introduce in our company?

TC: To increase innovation or to become more innovative.

PBL: Yeah, we can try that. Alright, let’s see… “To increase innovation in your company it’s important to introduce values that foster culture, creativity, exploration, and change,” and these are again values from our data model… Here it goes into a bit more detail: “Creativity encourages employees to think outside the box. Exploration is about trying new ways and new solutions, being curious. Change is about actually thriving in change.” And not everyone thrives in change.

TC: This is a tough one.

PBL: It’s a tough one for some of us definitely, myself included.

TC: Definitely. I think everyone in Tech over the last 18 months would say that they’ve been struggling with this one, with AI coming to the forefront of every conversation.

PBL: Yeah, exactly. It really has introduced change and uncertainty. Is my job safe, or will I still be writing software a year from now? So change is also important: to create an innovative culture, you need to be comfortable with change. Innovation itself is about looking for new ways of doing things: experimenting, taking risks, learning from failures. So your company would benefit from focusing on all four of these values.

TC: This is great. I think specifically, our R&D team, they’re going through this process on a regular basis, but I think focusing specifically on these values would be really helpful. So immediately I’ll be able to deliver some value for them. This is really cool.

PBL: Very cool.

TC: I’m curious, maybe you can also give us a little bit of a peek at what’s going on behind the scenes of the Copilot, or maybe some of the other tools you guys are developing?

PBL: So, on the surface it seems simple: you put a question in and you get an answer back. Let’s jump over and see what it looks like at the conceptual level, which matches fairly well with the actual tech behind the scenes.

Watch Peter Show Thymn the AI Under the Hood of the Copilot

Read the Full Transcript of Peter’s AI Under the Hood Demo Below:

PBL: Okay, first off, I want to start from that screen where you’re asking the question. The first thing that happens is that we hand the question off to our API. The application obviously has an API, and the nature of these LLM technologies is that they still take some time to process, and there are things you want to do as part of that process. So it makes sense to put that in a background processing queue. That’s the first thing that happens: we store your question, and then we queue the pre-processing. So the first part here is a very classic application architecture, where you have a queue and some way of processing work in the background.

This is important, because it will take a few seconds to provide the complete answer. So we bring this over into the processing part, where we actually start to analyze your question. The infrastructure setup is relatively simple: we’re hosting this using Container Apps in Azure, and what’s running in the container app is Python scripts. So we’re going from Azure Functions over to Container Apps, which also illustrates how our teams are set up now. On the application side, it’s a .NET application, using C# and .NET. On the AI side, we really wanted to enable the data scientists to help us test the prompts and all those things, and it made sense to let them work in Python, where they are most familiar, instead of forcing them to learn a completely new toolset in .NET. So here we’re hosting Python code in the container app.
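The store-then-queue pattern described above can be sketched in Python. This is a minimal, in-process illustration under stated assumptions: `ChatJob`, `ChatQueue` and the handler are hypothetical names, and a `queue.Queue` plus a worker thread stands in for whatever queue service the real system uses, which the interview does not name.

```python
import queue
import threading
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ChatJob:
    job_id: int
    user_id: str
    question: str


class ChatQueue:
    """Hypothetical in-process stand-in for a real message queue."""

    def __init__(self, handler: Callable[[ChatJob], str]) -> None:
        self._jobs: "queue.Queue[ChatJob]" = queue.Queue()
        self._handler = handler
        self._lock = threading.Lock()
        self._next_id = 0
        self.stored_questions: Dict[int, str] = {}
        self.results: Dict[int, str] = {}
        # Background worker: submit() returns before the slow LLM work runs.
        threading.Thread(target=self._run, daemon=True).start()

    def submit(self, user_id: str, question: str) -> int:
        """Store the question, enqueue it, and return a job id immediately."""
        with self._lock:
            job_id = self._next_id
            self._next_id += 1
            self.stored_questions[job_id] = question
        self._jobs.put(ChatJob(job_id, user_id, question))
        return job_id

    def _run(self) -> None:
        while True:
            job = self._jobs.get()
            try:
                self.results[job.job_id] = self._handler(job)
            except Exception as exc:  # keep the worker alive if one job fails
                self.results[job.job_id] = f"error: {exc}"
            finally:
                self._jobs.task_done()

    def wait(self) -> None:
        """Block until every queued job has been processed (useful in demos)."""
        self._jobs.join()


# Usage: in a real pipeline the handler would call the LLM; here it is a placeholder.
chat_queue = ChatQueue(lambda job: f"answer to: {job.question}")
job_id = chat_queue.submit("user-1", "How do we become more innovative?")
chat_queue.wait()
```

The point of the design is that the API can acknowledge the question at once and let the client poll or be notified, rather than holding a request open for the several seconds an LLM call can take.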

Of course, there are two critical components in this architecture. The first is a vector database, and for that we’re using the Azure Cognitive Search service. The second is Azure OpenAI, which hosts the LLM models. Right now we’re running on the GPT-3.5 Turbo 16k model. It sounds super fast, and it’s definitely faster than 3.5. For the next iteration, we’re moving up to GPT-4, and there’s a Turbo version of that as well; I believe it’s in preview right now. These things are still moving pretty fast. The models are increasingly better at reasoning about many different topics, and that’s what you get when moving from one version to the next: they become better at code, better at math, and so on.

So this is the main architecture, and it’s simple enough that we should move on to its inner workings: the actual flow of the question. This is where it starts to get interesting, I believe. Here is the request I was talking about, and this was the question I asked previously. In addition to the question you asked, we also incorporate a bit of the history of your previous questions and the answers you received.

TC: That’s just per user, right?

PBL: Yeah, this is per user. This is personal data, and we store it related to you as a user; we’re not sharing it across users. We are looking at use cases where you might want to collaborate with your team, for example, and share these things across the team, but for now it’s strictly tied to your user account. Okay, moving over here, the first step is to formulate the real question we’re going to ask the LLM. We take your question and the chat history and use the LLM to formulate a new question, which is what we bring further down the pipeline. Each time we call the LLM here, which is hosted in Azure OpenAI, you can introduce these prompts that everyone is talking about; that’s prompt engineering. This is the first prompt our application uses with the LLM: “Given the following conversation and the follow up question, rephrase the follow up question to be a standalone question. No matter what language the question is in, the standalone question should be in English.” So this uses the LLM to pre-process your question, combined with the chat history, into something more suitable for the rest of the pipeline.
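The two ideas above, per-user chat history and a “condense the question” prompt, can be sketched roughly as follows. The template only paraphrases the prompt quoted in the interview (the production wording is not published), and `ChatHistoryStore`, `condense_question` and the `max_turns` limit are hypothetical. `llm` is any function that sends a prompt and returns text, so the sketch does not assume a particular SDK.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple

LLM = Callable[[str], str]  # any prompt -> completion function (assumption)

# Paraphrase of the prompt described in the interview; exact wording unknown.
CONDENSE_TEMPLATE = (
    "Given the following conversation and a follow up question, rephrase the "
    "follow up question to be a standalone question. No matter what language "
    "the question is in, the standalone question should be in English.\n\n"
    "Chat History:\n{chat_history}\n"
    "Follow Up Input: {question}\n"
    "Standalone question:"
)


class ChatHistoryStore:
    """Keeps each user's recent (question, answer) turns; nothing is shared
    across users. max_turns is a hypothetical window size."""

    def __init__(self, max_turns: int = 3) -> None:
        self._turns: Dict[str, Deque[Tuple[str, str]]] = defaultdict(
            lambda: deque(maxlen=max_turns)
        )

    def add_turn(self, user_id: str, question: str, answer: str) -> None:
        self._turns[user_id].append((question, answer))

    def as_text(self, user_id: str) -> str:
        # .get() avoids creating an empty entry for unknown users.
        lines: List[str] = []
        for q, a in self._turns.get(user_id, ()):
            lines.append(f"Human: {q}")
            lines.append(f"Assistant: {a}")
        return "\n".join(lines)


def condense_question(llm: LLM, chat_history: str, question: str) -> str:
    """Ask the LLM to rewrite the follow-up as a standalone English question."""
    prompt = CONDENSE_TEMPLATE.format(chat_history=chat_history, question=question)
    return llm(prompt).strip()
```

The bounded `deque` is one simple way to keep only the most recent turns, so the prompt stays within the model’s context window as a conversation grows.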

TC: So, I would imagine that this is kind of a standard step in a lot of apps that are built on top of the OpenAI LLM.

PBL: Yeah, I should mention that this kind of application uses what’s called RAG, a Retrieval Augmented Generation approach.

TC: We sure know about RAGs at Black Label as we’re working on developing a few for our clients.

PBL: This is more or less straight out of the textbook; the LangChain examples show more or less the same flow, using the LLM throughout the pipeline. Now we move into the retrieval step of the RAG. Using that standalone question, I can now make a query into the vector store. This is our data: the vector store is where our knowledge is stored. Our articles, with descriptions of our culture values, mindsets and so on, go into this vector store.
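The retrieval step can be illustrated with a toy vector store that ranks documents by cosine similarity to the query. This is a sketch, not the Azure Cognitive Search API: `InMemoryVectorStore` and `similarity_search` are hypothetical names, and the word-count `embed` function is a stand-in so the example runs without an embedding model; a real system would use model-generated embedding vectors.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Document:
    title: str
    text: str


def embed(text: str) -> Dict[str, float]:
    # Stand-in "embedding": lowercase word counts. Only here to keep the
    # sketch self-contained; real embeddings come from an embedding model.
    return dict(Counter(re.findall(r"[a-z]+", text.lower())))


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Embeds documents once, then ranks them against each query."""

    def __init__(self, docs: List[Document]) -> None:
        self._index: List[Tuple[Document, Dict[str, float]]] = [
            (d, embed(d.text)) for d in docs
        ]

    def similarity_search(self, query: str, k: int = 2) -> List[Document]:
        q = embed(query)
        ranked = sorted(self._index, key=lambda pair: cosine(q, pair[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]
```

The query passed in would be the standalone question from the previous step, which is why that step normalizes it to English before the lookup.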

TC: So this is proprietary then?

PBL: Yes. What’s really key to the RAG architecture is to take the LLM, which is general and trained on a lot of open data, and let it use your knowledge, from your application or the domain you are developing, so that the result is, in our case, culture specific. So, based on the standalone question, we do a similarity lookup: we look for documents that seem related to the question you’ve asked and pull them out of the vector store. Then we combine those documents with the original question and construct a new prompt, which is a bit more elaborate. This is where you shape the personality of the Copilot, the Culture Copilot. The prompt says: as the Culture Copilot, you assist with inquiries related to the nuances of business culture; use the context and information from our knowledge base and the chat history to help you form your responses accurately.

So it’s already instructing the Copilot how it should respond. Then it says “please adhere to the following guidelines: if the provided information is not sufficient to answer, indicate that there is insufficient information.” Initially, without this clause, it would just say “I don’t have enough information in the context”, period. But why? That’s not a very friendly experience; you really feel that you’re talking to a machine, which of course you are. But you can build on this prompt to shape it into a more natural, human-like, ChatGPT-style experience.

TC: So, this is normal prompt engineering as well?

PBL: Yeah, I would liken this to a programming language. These are really instructions for the language model on how it should generate the response: for example, that it should start the answer directly, without repeating the question. I’ve seen a lot of examples where it always repeats my question, and that gets annoying; it’s not a natural way to have a conversation. So we instructed it not to do that, and to always respond in the language of the question. We added this so that I can ask in Norwegian or Swedish or any other language, and it will just adopt that language. It should provide elaborate, nuanced and informed answers, highlighting relevant details. If the question is not clear, it should ask for clarification. If the question is not relevant to the context, meaning relevant to culture or business culture, it will actually ask a follow up question to clarify. And if it cannot answer based on the provided context, it should clarify that it can only assist with inquiries related to business culture.
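The answer prompt described above can be sketched as a template plus a helper that packs the retrieved documents into a numbered context block. The guideline text is only a paraphrase of what is described in the interview, and `build_context`, `build_answer_prompt` and the character budget `max_chars` (a rough proxy for the model’s token limit) are hypothetical. Note that the final prompt carries the user’s original question, not the English standalone one, which is what lets the “respond in the language of the question” guideline work.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Document:
    title: str
    text: str


# Paraphrased from the guidelines described in the interview; illustrative only.
QA_TEMPLATE = """As the Culture Copilot, you assist with inquiries related to the nuances of business culture.
Use the context from the knowledge base and the chat history to form your responses accurately.
Please adhere to the following guidelines:
- Start the answer directly, without repeating the question.
- Always respond in the language of the question.
- Provide elaborate, nuanced and informed answers, highlighting relevant details.
- If the question is not clear, ask for clarification.
- If the provided information is not sufficient to answer, say so.
- If you cannot answer based on the provided context, clarify that you can only assist with inquiries related to business culture.

Context:
{context}

Chat history:
{chat_history}

Question: {question}
Answer:"""


def build_context(docs: List[Document], max_chars: int = 8000) -> str:
    """Number the retrieved documents and stop before exceeding a rough
    character budget (separators are not counted)."""
    parts: List[str] = []
    used = 0
    for i, d in enumerate(docs, start=1):
        part = f"[{i}] {d.title}: {d.text}"
        if used + len(part) > max_chars:
            break
        parts.append(part)
        used += len(part)
    return "\n\n".join(parts)


def build_answer_prompt(context: str, chat_history: str, question: str) -> str:
    return QA_TEMPLATE.format(context=context, chat_history=chat_history, question=question)
```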

So this final part also replaced that very technical response, “I cannot answer based on the provided context”; now it will say something like “I’m only able to answer questions related to business culture.” Below that, you provide the additional context, which is the documents retrieved with the standalone question, plus the chat history and the original question. It’s a bit of a mouthful, but this is really the core of our chat experience. We feed that into the LLM and get the final answer to the original question, and that’s what we provide back to the application.

What we do in addition, which is not yet enabled in the end user experience but which I think is pretty important for a chat experience like ours, comes from the fact that users quite quickly come back to us and ask, “but how did it come up with this answer?” Where did it get its knowledge from? From the internet, or…

TC: Yeah, yeah. So “why should I trust you?”

PBL: Exactly. So it quickly became obvious that we should start to provide attributions: “here you can go and read more about this, here is where we took some of this knowledge from.” This makes a lot of sense in applications that plug this into the user experience, because much of the knowledge we build on is ours. It’s not something you would necessarily find on the internet; it’s part of our IP, and the definitions live in the application. So we want to supply those references, so users know which values were part of a response.

So, in the example we did earlier, we listed these four values. They reference our model, with specific descriptions of these values and very clear definitions, and those definitions are part of the knowledge base the LLM now gets access to via the context.
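Putting the steps together, a pipeline that also returns attributions might look like the sketch below. Everything here is hypothetical: `answer_with_sources` and `CopilotAnswer` are illustrative names, `llm` and `retrieve` are injected functions rather than calls to a specific SDK, and the prompts are abbreviated stand-ins for the fuller templates discussed above. One design caveat: the “sources” are the documents that were placed in the context, which is not proof that the model actually relied on each of them.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Document:
    title: str
    text: str


@dataclass
class CopilotAnswer:
    text: str
    sources: List[str]  # titles of knowledge-base entries supplied as context


LLM = Callable[[str], str]
Retriever = Callable[[str], List[Document]]


def answer_with_sources(
    llm: LLM, retrieve: Retriever, chat_history: str, question: str
) -> CopilotAnswer:
    # 1. Rephrase the follow-up into a standalone English question.
    standalone = llm(
        "Rephrase as a standalone English question.\n"
        f"History:\n{chat_history}\nQuestion: {question}"
    ).strip()
    # 2. Retrieve related knowledge-base entries with the standalone question.
    docs = retrieve(standalone)
    # 3. Answer from the retrieved context plus the ORIGINAL question.
    context = "\n".join(f"{d.title}: {d.text}" for d in docs)
    answer = llm(f"Context:\n{context}\nHistory:\n{chat_history}\nQuestion: {question}\nAnswer:")
    # 4. Attribution: unique titles of the documents used as context, in order.
    sources = list(dict.fromkeys(d.title for d in docs))
    return CopilotAnswer(answer.strip(), sources)
```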

Make sure to test out Culture Intelligence’s new Culture DNA Copilot for yourself today. It is a whole new dimension of organizational value mapping!